I recently had the opportunity to attend a presentation on the "justice" and "injustice" of algorithms. The concept of bias in algorithms was raised (due to unbalanced or insufficient data, etc.), along with the question of how algorithms should be trained so that they do not produce differences across “sensitive” variables (age, sex, race...). At one point the speaker said something like “...because, if we are talking about predicting the solvency of loan applicants, there should be no differences between men and women, right?”, awaiting the acquiescence of the audience. The thought that went through my head was: “Well, I don't know, I would need data. Because if we were talking about predicting a driver's accident risk, one could also expect there to be no difference between men and women. Or if we were talking about a long-distance runner's times, one could also expect there to be no differences between runners of what we call the 'white race' and those of the 'black race'. Or if we were talking about estimating a person's life expectancy, one could also expect there to be no differences between men and women”. But reality is stubborn: women have fewer accidents than men, black runners post much better times than white runners, and men live shorter lives than women. I quickly came to the conclusion that the presentation was not about the justice and injustice of algorithms, but about what is now regarded as "politically correct" or "incorrect".

The problem with forcing an algorithm to produce a "politically correct" result is that we may simply be turning our backs on reality. Without denying in any way that positive discrimination is desirable under certain conditions, the risk we run in trying to impose an ideology on reality can be high. I imagined a doctor assessing whether a tumor that affects men and women differently is malignant or benign, is at one stage of development or another, or is of one type or another. A quick Internet search turns up countless cases: one example is "Study of glioblastoma highlights sex differences in brain cancer", from the National Cancer Institute (the first result my search returned). In my opinion, enforcing the so-called "fairness" of the algorithm can be the biggest bias we introduce and, in cases like the brain cancer example above, we may even be putting a person's life in danger.

So, back to the initial question: do men and women offer the same creditworthiness? My answer was still “I don't know, as fair or unfair as it may seem. Perhaps men or women are more or less cautious, more or less practical, or better or worse managers. I don't know, nor do I want to prejudge them”. And that is without considering that no one would rely on a univariate analysis to make a decision of this kind. Reality is infinitely more complex, and the way in which a variable such as gender or race influences a prediction is much more subtle than it may appear. In a case like this we may not be putting anyone's life in danger, but we do run the risk of distorting reality for the sake of “social justice”.

I admit that after these thoughts went through my head, I stopped paying attention to the presentation.

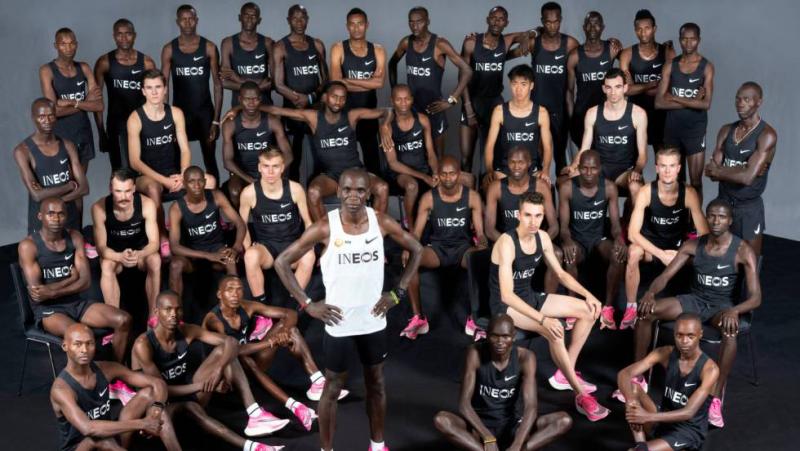

Image: Kipchoge with the athletes who accompanied him in the marathon on October 12, 2019. Reuters Agency.