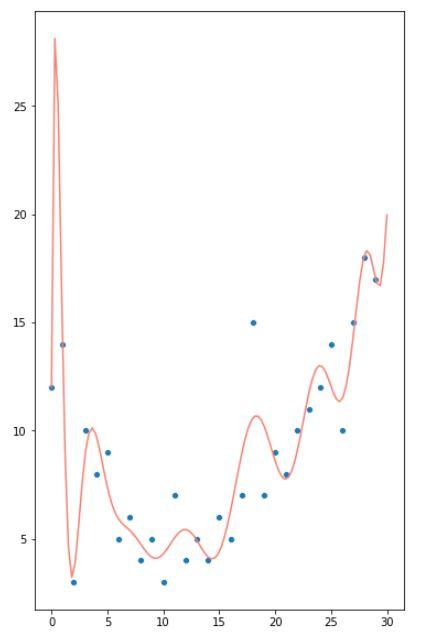

Model overfitting is a common problem in machine learning in which a model performs well on its training dataset but poorly on unseen data. It typically occurs when the model is too complex relative to the amount of training data, so it learns the noise in the training data rather than the underlying relationship.

Because an overfit model generalizes poorly, its performance on new data is worse than its training performance suggests. Preventing overfitting is therefore essential for building a robust and accurate model.
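
To make the idea concrete, here is a minimal sketch, assuming NumPy is available; the data and the `polynomial_errors` helper are hypothetical, made up for illustration. It fits a degree-1 and a degree-9 polynomial to ten noisy samples of a linear relationship. With ten points and ten coefficients, the degree-9 fit interpolates the training data almost exactly, so its training error is near zero, while its error on fresh test samples is typically much higher than the straight line's.

```python
import numpy as np


def polynomial_errors(degree, x_train, y_train, x_test, y_test):
    """Fit a least-squares polynomial of the given degree; return (train MSE, test MSE)."""
    model = np.polynomial.Polynomial.fit(x_train, y_train, degree)
    train_mse = float(np.mean((model(x_train) - y_train) ** 2))
    test_mse = float(np.mean((model(x_test) - y_test) ** 2))
    return train_mse, test_mse


rng = np.random.default_rng(0)
# Hypothetical data: the true relationship is linear (y = 2x + 1) plus Gaussian noise.
x_train = np.linspace(0.0, 1.0, 10)
y_train = 2 * x_train + 1 + rng.normal(scale=0.3, size=x_train.size)
x_test = rng.uniform(0.0, 1.0, size=200)
y_test = 2 * x_test + 1 + rng.normal(scale=0.3, size=x_test.size)

# Compare a simple model (degree 1) with a very flexible one (degree 9).
results = {d: polynomial_errors(d, x_train, y_train, x_test, y_test) for d in (1, 9)}
for degree, (train_mse, test_mse) in results.items():
    print(f"degree {degree}: train MSE {train_mse:.4f}, test MSE {test_mse:.4f}")
```

The more complex model always wins on the training set (a degree-9 least-squares fit can reproduce anything a degree-1 fit can), but it typically loses on the test set, which is the signature of overfitting.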

There are several ways to prevent overfitting:

- Use a simpler model: A model with fewer parameters (for example, a lower-degree polynomial or a shallower decision tree) has less capacity to memorize noise, so it is less likely to overfit and tends to generalize better.

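- Use cross-validation: Cross-validation splits the training data into k folds, trains the model on k - 1 of them, and evaluates it on the held-out fold, repeating until every fold has served once as the validation set. Averaging the validation scores estimates how the model performs on unseen data. Cross-validation does not prevent overfitting by itself, but a large gap between training and validation performance reveals it, and comparing validation scores across candidate models helps choose one that generalizes.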

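- Use regularization: Regularization constrains the model to discourage overfitting, typically by adding a penalty term to the loss function during training. Common choices are an L2 penalty on the weights (as in ridge regression or weight decay) and an L1 penalty (as in lasso), which push the model toward smaller or sparser weights.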

- Use more data: The more representative training data we have, the harder it is for the model to fit noise that is specific to individual examples. Increasing the size of the training dataset therefore reduces the risk of overfitting and improves the model's generalization ability.

- Use early stopping: Early stopping monitors the model's performance on a held-out validation set during training and halts training once that performance stops improving or starts to degrade. This prevents the model from continuing to fit the noise in the training data; the weights from the best validation epoch are often kept as the final model.
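
The cross-validation item above can be sketched in a few lines of NumPy. This is a minimal k-fold implementation under stated assumptions: the helper names `k_fold_indices` and `cross_val_mse`, the synthetic linear data, and the use of polynomial degree as the model-complexity knob are all hypothetical. Libraries such as scikit-learn provide ready-made equivalents (for example `sklearn.model_selection.KFold` and `cross_val_score`).

```python
import numpy as np


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle sample indices and split them into k roughly equal folds."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n_samples), k)


def cross_val_mse(x, y, degree, k=5):
    """Mean validation MSE of a polynomial of the given degree over k folds."""
    folds = k_fold_indices(len(x), k)
    scores = []
    for i, val_idx in enumerate(folds):
        # Train on every fold except the i-th, then validate on the i-th.
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        model = np.polynomial.Polynomial.fit(x[train_idx], y[train_idx], degree)
        scores.append(np.mean((model(x[val_idx]) - y[val_idx]) ** 2))
    return float(np.mean(scores))


# Hypothetical data: a noisy linear relationship.
rng = np.random.default_rng(1)
x = rng.uniform(0.0, 1.0, size=40)
y = 2 * x + 1 + rng.normal(scale=0.3, size=x.size)

cv_scores = {d: cross_val_mse(x, y, d) for d in (1, 3, 9)}
best_degree = min(cv_scores, key=cv_scores.get)
for degree, score in cv_scores.items():
    print(f"degree {degree}: mean validation MSE {score:.4f}")
print(f"selected degree: {best_degree}")
```

Selecting the degree with the lowest mean validation error, rather than the lowest training error, is what lets cross-validation steer model selection away from an overfit model.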
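
For the regularization item, one common concrete form is an L2 penalty (ridge regression), which minimizes `||Xw - y||^2 + lam * ||w||^2` and has the closed-form solution `w = (X^T X + lam * I)^(-1) X^T y`. The sketch below assumes NumPy and uses hypothetical data and a hypothetical `ridge_fit` helper. For brevity it penalizes the bias column too, whereas in practice the intercept is usually left unpenalized.

```python
import numpy as np


def ridge_fit(X, y, lam):
    """Return w minimizing ||X @ w - y||^2 + lam * ||w||^2 (closed-form ridge solution)."""
    n_features = X.shape[1]
    # Solve (X^T X + lam * I) w = X^T y instead of forming an explicit inverse.
    # Note: the bias column is penalized as well here, for simplicity.
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)


# Hypothetical data: a noisy linear relationship.
rng = np.random.default_rng(2)
x = np.linspace(0.0, 1.0, 20)
y = 2 * x + 1 + rng.normal(scale=0.3, size=x.size)
# Degree-9 polynomial features: columns 1, x, x^2, ..., x^9.
X = np.vander(x, N=10, increasing=True)

# A larger penalty strength lam shrinks the fitted weight vector.
weight_norms = {lam: float(np.linalg.norm(ridge_fit(X, y, lam))) for lam in (1e-6, 1e-2, 1.0)}
for lam, norm in weight_norms.items():
    print(f"lam={lam:g}: ||w|| = {norm:.2f}")
```

Raising `lam` shrinks the weights, which restrains the large, oscillating coefficients a degree-9 polynomial would otherwise use to chase the noise.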
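
The early-stopping item can be sketched as a small, framework-agnostic helper. The `EarlyStopping` class, its `patience` and `min_delta` parameters, and the validation-loss values below are hypothetical illustrations, not any particular library's API. In a real training loop you would call `step()` after each epoch's validation pass and pass the model's current weights as `model_state`.

```python
import copy


class EarlyStopping:
    """Signal a stop once validation loss fails to improve for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.best_state = None
        self.epochs_without_improvement = 0

    def step(self, epoch, val_loss, model_state=None):
        """Record this epoch's validation loss; return True when training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            # Keep a copy of the best weights so they can be restored afterwards.
            self.best_state = copy.deepcopy(model_state)
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


# Hypothetical per-epoch validation losses standing in for a real training loop.
val_losses = [1.0, 0.8, 0.7, 0.75, 0.72, 0.9]
stopper = EarlyStopping(patience=2)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    # In practice: train one epoch, evaluate on the validation set, then call step().
    if stopper.step(epoch, loss):
        stopped_at = epoch
        break
print(f"stopped at epoch {stopped_at}; best epoch {stopper.best_epoch} (val loss {stopper.best_loss})")
# prints: stopped at epoch 4; best epoch 2 (val loss 0.7)
```

The `patience` window keeps a single noisy uptick in validation loss from ending training too early, while `best_state` lets you roll back to the epoch that generalized best.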

Applying these techniques reduces the risk of overfitting and helps build a robust and accurate model. Because overfitting can occur at any stage of the machine learning process, it is worth checking for it throughout development rather than only once at the end.