Decision trees are a popular machine learning algorithm that is used for both classification and regression tasks. They are a type of supervised learning algorithm that can be used to predict a target variable based on one or more input variables.

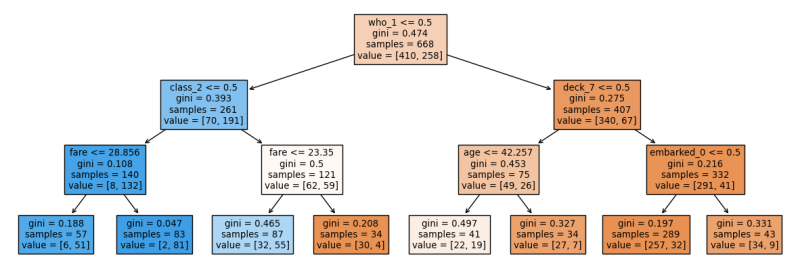

The basic structure of a decision tree is a tree-like graph, with each internal node representing a decision or test on an input variable, and each leaf node representing a prediction or class label. The algorithm works by recursively partitioning the input space into smaller regions, called branches, based on the values of the input variables. The partitioning is done in such a way that each branch corresponds to a specific value or range of values for the input variables, and the goal is to maximize the separation between the different classes or target values.

One of the key advantages of decision trees is that they are easy to interpret and understand. The tree structure provides a clear visual representation of the decisions and logic used to make predictions, making it easy for humans to understand the reasoning behind a prediction. Additionally, decision trees are relatively easy to implement and can be applied to a wide range of problems, making them a popular choice for many machine learning tasks.

Some common applications of decision trees include:

- Classification tasks such as identifying spam email or fraudulent credit card transactions

- Regression tasks such as predicting stock prices or house prices

- Medical diagnosis and treatment planning

- Image and speech recognition

- Natural Language Processing

There are different algorithms for building decision trees. Some popular ones include ID3, C4.5, C5.0, and CART. Each algorithm has its own strengths and weaknesses, and the choice of which algorithm to use depends on the specific application and the type of data being analyzed.